Famously founded as an institution where any person can receive instruction in any study, Cornell is a place where robots, too, can receive programming for just about any application. Whether they are walking, swimming, driving or farming, Big Red robots are breaking all the rules.

By Syl Kacapyr

On the fifth floor of Upson Hall lies a suite of laboratories where one is bound to bump into a robot. The recently renovated building united many of Cornell Engineering’s robotics labs in one location, creating an environment ripe for teamwork, where faculty and students work together to build and program their radical ideas into reality.

The brightly lit robotics wing is home to several research labs, including the Verifiable Robotics Research Group, where engineers generate code for everything from a reconfigurable modular robot as small as a shoebox to a human-size robot that competed in the famed DARPA Robotics Challenge.

"We are extremely collaborative. We have a lot of joint projects and students are talking across labs; it’s a unique strength of Cornell robotics," says group director Hadas Kress-Gazit, associate professor in the Sibley School of Mechanical and Aerospace Engineering. "Another strength is our connection to the ag college, our connection to the architecture college, our connection to Weill Cornell Medicine. We have a lot of unique things in-house that aren’t engineering."

Many other universities are collaborative by nature, says Kress-Gazit, but at Cornell "we celebrate collaboration."

One of the many projects in Kress-Gazit’s research group involves a team of nine researchers from three universities and two Cornell departments. PERISCOPE (Perceptual Representations for Actions, Composition and Verification) is a $7.5 million endeavor that attempts to solve one of the biggest challenges in robotics: creating autonomous systems that can anticipate and correct their mistakes.

This challenge is especially important as robots attempt to enter spaces once only inhabited by humans—roadways, warehouses and natural spaces such as fields and airways. Places where humans know to expect the unexpected.

"You’re talking about a system that’s in the physical world, and the physical world is not really easy to model," says Kress-Gazit, who is conducting the research with her Sibley School colleague, Professor Mark Campbell, among others. "Whenever you model it you’re making some assumptions. But what happens if those assumptions fail? How do you recover and how do you repair? Those are the kinds of questions we’re looking into."

To test the programming, the team will deploy several robots to patrol an area while searching for and retrieving small objects. Clutter within the environment and changes to each robot’s gripper will test their ability to detect and recover from challenges they weren’t specifically programmed to overcome.

A second project partners with the Raymond Corporation, a leading manufacturer of forklifts and provider of material handling solutions. The company has engineered driverless forklifts and is now seeking to automate entire warehouses.

"The idea for the project is to use some of the techniques we’ve developed to be able to reduce the time needed to automate these warehouses while still maintaining guarantees of correctness and ensuring safety," says Kress-Gazit.

Several other researchers within Upson Hall’s robotics wing are focused on autonomous vehicles, including Campbell, whose research group has engineered Cornell’s only full-scale autonomous cars, and professor Silvia Ferrari, who is programming micro-aerial quadcopters and other flying robots using neuromorphic processing, a new class of control algorithms that mimic neural activity in the brain.

New approaches to social robots

Step inside Cornell’s Collective Embodied Intelligence Lab and you might be surprised to see a chunk of wood being devoured by live termites and a small colony of ants diligently digging through an encasement.

The lab is run by Kirstin Petersen, assistant professor of electrical and computer engineering, who designs tools that can analyze and learn from social insects, and then uses that knowledge to build robots that work in similar ways.

"If you look at ants and bees and termites in nature, you see that they’re capable of remarkably complex behavior like foraging, nest construction, defense," explains Petersen, "and we can build robot collectives like them that are capable of achieving tasks in a manner that’s more efficient and error tolerant than traditional robot systems."

She’s partnered with Kress-Gazit and Guy Hoffman, assistant professor of mechanical and aerospace engineering, on a research project that examines teams of robots and people that must work together to accomplish an ad-hoc task, such as an emergency evacuation. The project advances the research field of ubiquitous multi-robot systems, says Petersen, and investigates what the robots should look like, how they should behave and how they should interact with people.

The researchers are collecting data using a combination of autonomous ground robots, miniature flying blimps, and people. Algorithms help the robots automatically modify their behavior and provide intuitive feedback based on interactions with humans and the environment.

The way people and robots interact has become a challenging robotics field of its own, and that’s where Hoffman comes in. Much of his research focuses on designing robots that "people can relate to from a psychological point of view," he says. His role in the project is to consider how, in an evacuation situation, people might provide instructions to robots, be guided by robots or need physical assistance from robots.

"When humans and robots interact, it’s much more like a chess game," Hoffman once told a crowd at a TEDx Talk. "The human does a thing, the robot analyzes whatever the human did, the robot decides what to do next, then the human waits until it’s their turn again. But I want my robots to be less like a chess player and more like a duo that clicks and works together," added Hoffman, who has a background in animation, music and acting, and has collaborated with sociologists and behavioral economists for his research.

Some of Hoffman’s creations include Blossom, a robot with a wool-knitted exterior whose movements resemble bouncing, stretching and dancing; Vyo, a desktop assistant that controls smart appliances through physical icons and gestures instead of touchscreens and voice commands; and Shimi, a smartphone speaker dock that moves to embody the music it plays.

Soft, shape-changing robots

When designing robots that must navigate perilous, unpredictable environments, why not find inspiration in the creatures that call those environments home? Mother Nature is, after all, an engineer.

In the Organic Robotics Lab led by Rob Shepherd, associate professor of mechanical and aerospace engineering, researchers combine materials science principles with advanced manufacturing techniques to develop soft materials that can mimic abilities found in the natural world—materials that help robots crawl like a worm, swim like a fish, change color and texture like an octopus, and touch like a human.

One of his latest projects—a collaboration with chemical and biomolecular engineer Lynden Archer—is an aquatic, soft robot inspired by a lionfish and its distinctive dorsal spines. The robot’s silicone skin and flexible electrodes allow it to bend and flex as a fish would. The soft design supports a synthetic vascular system capable of pumping a blood-like, hydraulic liquid that powers the robot. Impressively, the design provides enough power for it to swim upstream for more than 36 hours.

"In nature we see how long organisms can operate while doing sophisticated tasks. Robots can’t perform similar feats for very long," said Shepherd in a statement announcing the research project. "Our bio-inspired approach can dramatically increase the system’s energy density while allowing soft robots to remain mobile for far longer."

Shepherd’s soft materials are changing the way engineers think about design, and have permeated a diverse set of research fields at Cornell, from agriculture and astronomy to medicine and mood.

His research inspired Hoffman to prototype a social robot with a soft, textured skin that can grow goosebumps and spikes as a way of expressing emotion.

"This is one of the nice things about being here at Cornell," Hoffman told the Cornell Chronicle in an article announcing the robot. "Rob is right down the hall, and this is how I discovered this technology."

Shepherd is also working with researchers from the College of Agriculture and Life Sciences to develop a soil-swimming robot that can semi-autonomously explore the root systems of crops and report their health. Another agricultural project partners with Petersen to create a touch-sensitive robot that can sense the ripeness of grapes, among other vineyard applications.

A project with Mason Peck, professor in the Sibley School and NASA’s former chief technologist, conceptualized a soft, eel-like robotic probe capable of exploring the harsh conditions of other worlds, such as the ice-covered oceans of Jupiter’s moon Europa.

Biomedical researchers have taken notice of Shepherd’s sensors and actuators aimed at patient care and rehabilitation. Creations include an orthotic glove, developed with Sibley School professor Andy Ruina and computer science assistant professor Ross Knepper, that is worn like an exoskeleton and provides grip strength to patients with limited mobility. And a prototype prosthetic heart made from a porous elastomer foam was engineered with a radiologist from Weill Cornell Medicine. The pores allow fluids to pump through the prosthetic organ, much like a real heart.

Breaking out

While the Sibley School is brimming with robots, it’s not the only place to find them at Cornell. The university’s unique and collaborative approach means faculty in a variety of academic disciplines have an opportunity to incorporate robotics into their research.

Knepper’s Robotic Personal Assistants Laboratory leverages insights from psychology, sociology and linguistics to build machines like his KUKA youBots, capable of collaboratively assembling furniture, and Baxter, a human-size robot programmed to learn from and work with people.

Keith Green, professor of design and environmental analysis, runs the Architectural Robotics Lab in Martha Van Rensselaer Hall, where his group designs built environments embedded with robotics, such as assistive robotic furniture and a "LIT ROOM," where the walls and ceiling change shapes, colors and sounds to create an imaginative, immersive environment.

New faculty at Cornell Tech in New York City include Daniel Lee, a professor of electrical and computer engineering focused on using a variety of robotic platforms to study how to build better sensorimotor systems that can adapt and learn from experience; and Wendy Ju, an assistant professor who is humanizing artificial intelligence in the areas of autonomous vehicles and human-robot interaction.

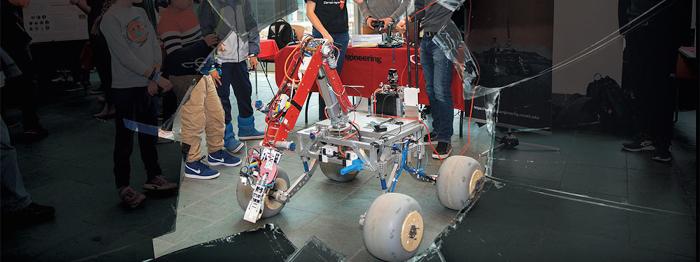

Students from across the university compete at the national level on robotics teams such as Cornell Mars Rover, which designs and builds robotic rovers to compete against others in a variety of field tasks. Cornell Engineering has also introduced a new undergraduate robotics minor, and in 2018 hosted its first Robotics Day, in which students packed Duffield Hall for a day of competitions and showcases.

"It’s an exciting time for robotics at Cornell," says Kress-Gazit.